A few months ago we built two custom GPU rendering nodes. I’ve decided to share the full specs of what we ended up buying, to make it easier for anyone who wants to build something similar.

List of components:

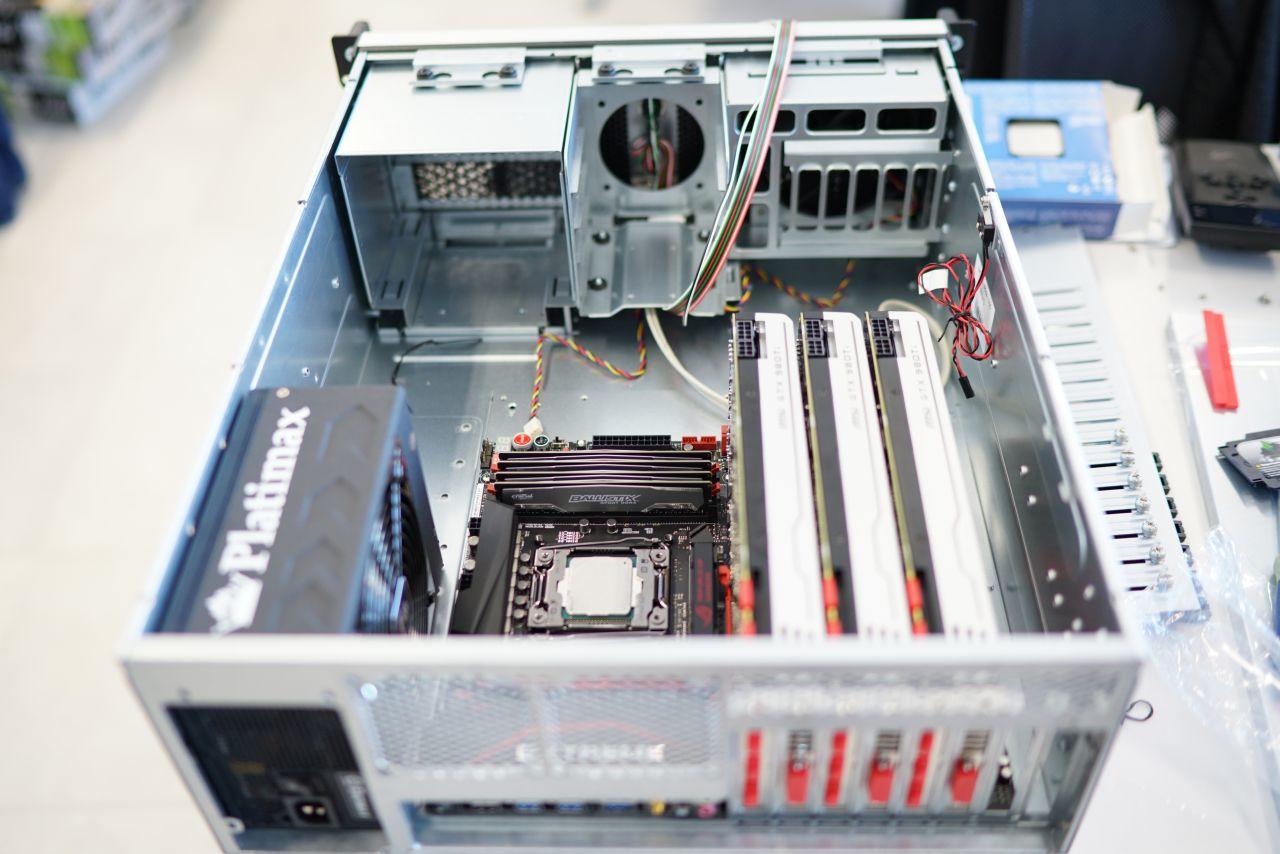

- Rack mount case – Chenbro RM413 4U + 8-slot PCI rear window (55H103413B007 + 55H173413B001) – 142 Eur + 15 Eur = 157 Eur. You have to unscrew the rear window that comes with the case and replace it with the extended one, or instead look for the Chenbro RM41300-FS81, which already has 8 slots. I wasn’t able to buy that one, but it’s out there.

- Motherboard – MSI X99A XPOWER – 390 Eur. This one is relatively cheap and has 2 x 1Gbit Ethernet ports.

- PSU – Enermax Platimax 1500W – modular, with 90-95% efficiency – 290 Eur. Enough to supply power for all GPUs.

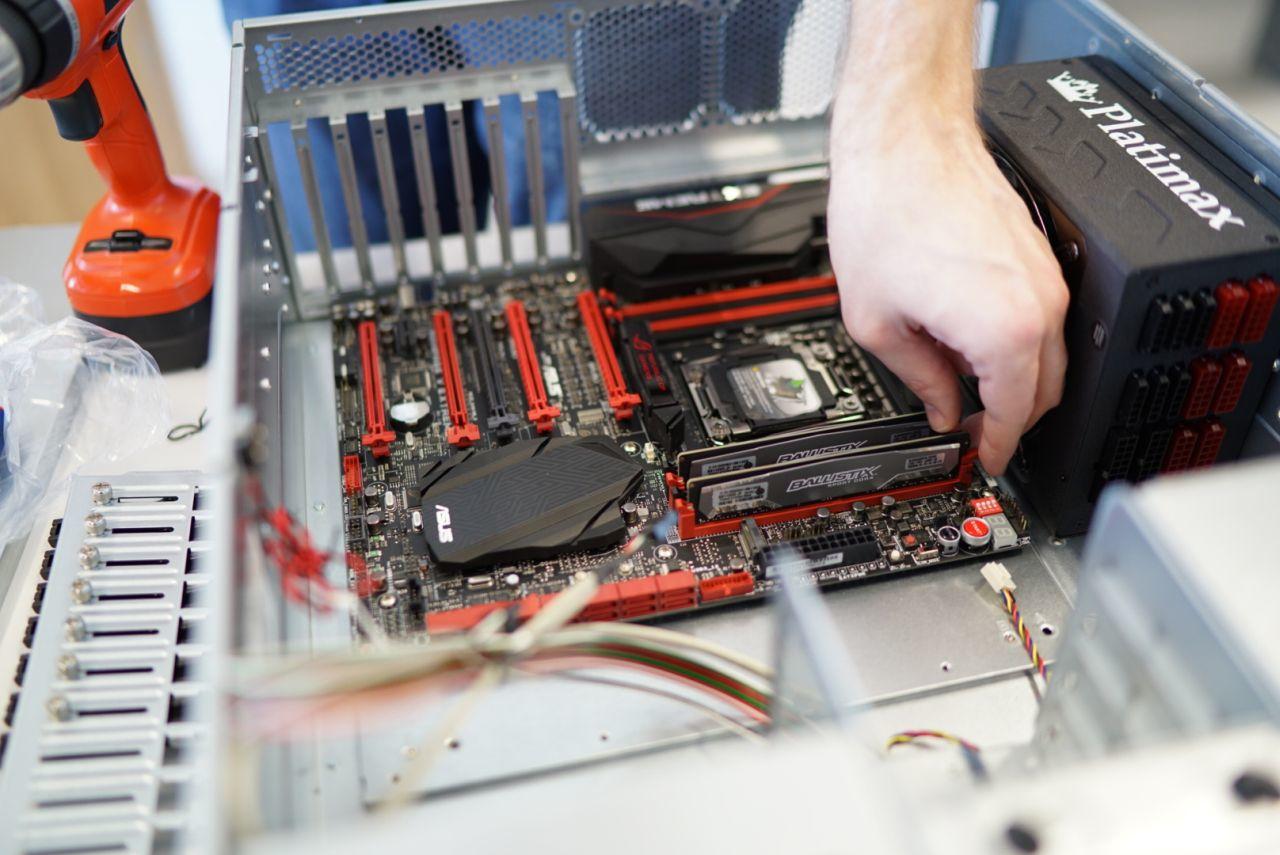

- Memory – Crucial Ballistix Sport 8GB DDR4 x 4 = 211 Eur.

- CPU – Intel i7-5930K 3.50GHz 15MB BOX = 560 Eur

- SSD – Samsung 250GB 850 EVO = 85 Eur.

- OS – Windows 7 PRO = 90 Eur

Total without GPUs = 1783 Eur

To put this into perspective: before I decided to build these, I got quotes for 4xGPU server nodes that would cost 7000 Eur, GPUs not included.

We ended up doing two configurations:

4 x Nvidia GTX Titan X 12GB at around 1150 Eur x 4 = 4600 Eur

Total cost = 6383 Eur (23% VAT included)

And

4 x Nvidia GTX 980Ti 6GB at around 2840 Eur for all four, with an Asus motherboard

Total cost = 4723 Eur (23% VAT included)
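The totals above can be re-derived with a few lines of Python. This is only a sanity-check sketch: the prices are the ones listed in this post, the variable names are made up for illustration, and the 2840 Eur figure is read as the price of all four 980Ti cards. The non-GPU cost of the second build is not quoted anywhere, so it is inferred from the total.

```python
# Component prices in Eur, copied from the list above (VAT included).
base_components = {
    "case": 142 + 15,      # Chenbro RM413 + 8-slot rear window
    "motherboard": 390,    # MSI X99A XPOWER
    "psu": 290,
    "memory": 211,
    "cpu": 560,
    "ssd": 85,
    "os": 90,
}

base_total = sum(base_components.values())

# Configuration 1: 4 x GTX Titan X at around 1150 Eur each.
titan_gpus = 4 * 1150
titan_total = base_total + titan_gpus

# Configuration 2: 4 x GTX 980Ti, read here as around 2840 Eur for all four.
# Only the grand total (4723 Eur) is given for this build, so the non-GPU
# part is inferred by subtraction, not quoted.
gtx980ti_gpus = 2840
gtx980ti_total = 4723
gtx980ti_base_inferred = gtx980ti_total - gtx980ti_gpus

print(f"Base (no GPUs):       {base_total} Eur")
print(f"Titan X node:         {titan_total} Eur")
print(f"980Ti node, non-GPU:  {gtx980ti_base_inferred} Eur (inferred)")
```

The inferred non-GPU cost of the second node comes out 100 Eur above the first, which is consistent with it using a different motherboard.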

Overall this is quite a cost-effective solution if you need rack-mounted nodes. You could use a standard desktop case if you want; there are many around and they are easy to find. Look for a 4-way SLI PC case and it will do the trick. If you’re really hardcore, you can go with a 7-slot PCI-E motherboard and a custom case. For inspiration, look at bitcoin mining builds.

And here are some photos from the building process:

I don’t have more photos from the build process, but it’s quite straightforward. If you’ve ever built a PC, this will be just as easy. It is basically a desktop build in a rack-mount server format.

One more note about cooling. These builds have been working basically 24/7 for 3 months now without any issues. Temperatures are in the range of 78-84 degrees, while fan speed is managed automatically by the GPU (default) and sits at 60-70%, so there is still some room in case things get too hot. Nvidia rates these GPUs as safe up to 91 degrees, so I don’t think there is any reason to worry. At the time of this post, the uptime of both render nodes was 22 days (since the last electricity malfunction). Depending on your ambient temperature, you could add some fans to the case to help with airflow. I’m using the stock setup at the moment.
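If you want to keep an eye on these numbers yourself, a small script can poll `nvidia-smi` and report how far each card is from the limit. The sketch below is not what we run on the nodes; it assumes `nvidia-smi` is on the PATH, that the card reports a numeric fan speed (some return `[N/A]`, which this parser does not handle), and it uses the 91 degree figure quoted above as the limit. The function names are made up for illustration.

```python
import subprocess

SAFE_LIMIT = 91  # limit quoted in this post; check the spec of your own card


def parse_gpu_readings(csv_text):
    """Parse 'index, temperature, fan' CSV lines into a list of dicts."""
    readings = []
    for line in csv_text.strip().splitlines():
        index, temp, fan = [field.strip() for field in line.split(",")]
        readings.append(
            {"index": int(index), "temp": int(temp), "fan_pct": int(fan)}
        )
    return readings


def headroom(readings, limit=SAFE_LIMIT):
    """Degrees left before each GPU reaches the limit, keyed by GPU index."""
    return {r["index"]: limit - r["temp"] for r in readings}


def query_gpus():
    """Run nvidia-smi and return parsed readings (assumes it is on PATH)."""
    out = subprocess.check_output(
        [
            "nvidia-smi",
            "--query-gpu=index,temperature.gpu,fan.speed",
            "--format=csv,noheader,nounits",
        ],
        text=True,
    )
    return parse_gpu_readings(out)


# Made-up sample in the range mentioned above, so the sketch runs
# on a machine without an Nvidia GPU. Swap in query_gpus() on a real node.
sample = "0, 78, 62\n1, 84, 70\n2, 81, 66\n3, 80, 65\n"
print(headroom(parse_gpu_readings(sample)))
```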

So far these bad boys have helped us with these shots (among many others):

https://www.behance.net/gallery/33632113/Guitar-CGI

https://www.behance.net/gallery/34685953/Excavator-CGI

Best,

Tomasz Wyszołmirski

Hi Tomasz,

Thanks for your post here with so much useful information.

I’m using a 5930k cpu for a GPU build on a Gigabyte GA-X99-UD4 motherboard.

I note that you recommend keeping an old GPU to drive displays, and for this I have a GTX 780ti (16 x pcie lanes).

For GPU rendering I have 2 x GTX 1070 (32 x pcie lanes). Another PCIE slot is used for an M.2 drive (4 x pcie lanes).

Suffice to say I’m mixing some current and older gen stuff here, but I’m using what I have and trying to make the most of it.

The problem I have is that the 780ti is not showing up. I’m pretty sure (well kinda…) it’s because the 5930 is limited to a maximum of 40 pcie lanes and I’m asking for 52. However, I’m told that the 40 lanes will be allocated as required and it should work?

This leads me to my question –

With your 4 graphics cards each requiring 16 x pcie lanes (total 64) how is your cpu able to service them at an optimal level?

Perhaps I’m lost in the woods here… Hoping you can help.
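The lane arithmetic in this question can be written down as a small budget check. This is only an illustrative sketch: cards can run at a narrower link width than x16, but how a given board actually splits its lanes is described in the motherboard manual, so the "reduced" layout below is hypothetical and the names are made up.

```python
CPU_LANES = 40  # i7-5930K, as stated in the question


def lanes_requested(devices):
    """Sum the lane widths in a {device name: link width} mapping."""
    return sum(devices.values())


def fits(devices, cpu_lanes=CPU_LANES):
    """True if the requested widths fit in the CPU's lane budget."""
    return lanes_requested(devices) <= cpu_lanes


# What the question adds up: every graphics card at its full x16 width.
full_width = {
    "GTX 780ti": 16,
    "GTX 1070 #1": 16,
    "GTX 1070 #2": 16,
    "M.2 drive": 4,
}

# Hypothetical reduced split; the real one depends on the motherboard.
reduced = {
    "GTX 780ti": 8,
    "GTX 1070 #1": 16,
    "GTX 1070 #2": 8,
    "M.2 drive": 4,
}

for name, layout in [("full width", full_width), ("reduced", reduced)]:
    total = lanes_requested(layout)
    print(f"{name}: {total} lanes, fits in {CPU_LANES}: {fits(layout)}")
```

The same reasoning applies to a 4-GPU node: four cards at x16 would need 64 lanes, so on a 40-lane CPU at least some of them have to run at a narrower width.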

Hi Tomasz, very good information. I’m planning a build like this but I’m quite new to GPU rendering. About GPUs: what’s the difference between Founders Edition and custom cards when using them for render nodes? I know there are some custom cards requiring 2.5 or 3 slots on the MB, but otherwise? Do these overclocked gaming cards work as well in V-Ray rendering?

VRAM aside, which configuration would you prefer for arch exteriors and product visualization stills using V-Ray RT DR: 2 x 1080 Ti or 4 x 1070?

Founders Edition GPUs are in general better for air cooling when you stack a couple of them next to each other. Overclocked cards might not be the best option for running several side by side, but if you go for 1 or 2 that are spaced apart, it should work well without overheating issues.

I don’t recommend using DR in general. It works, but the network is usually a bottleneck. I would go with 2 x 1080 Ti because of the 3GB of extra memory.

Best,

Hey Tomasz,

after reading your blog I was trying something similar and started with two GTX 1080 ti (Founders Edition). Currently I use a tower case and installed one GPU near the PSU and the other is directly above it.

But the problem is: when rendering with both GPUs, the upper GPU gets too hot (84 degrees) and as a result the performance goes down (from 1850 MHz to about 1500 MHz). I have tried to compensate with additional fans and/or opening the side and top panels of the tower, but nothing helped. How come you don’t have that problem with 4 GPUs? What can I do?

Best regards

Olaf

Hey Olaf,

You may need to adjust fan speed with something like MSI Afterburner (it works with most cards). There is a fan speed / temperature curve setting, and I usually push it up quite high.

If that doesn’t help, you may need to install a very strong fan in front of the GPUs (at the front of the case) to help push cold air through them. Look for fans with the highest possible CFM rating.

The cases I got for the 4-GPU builds came with some very powerful fans, but I needed to remove the dust protection film as it was slowing down the air a lot. After that, even with a closed case, the GPUs are not overheating. Other than that, I keep a steady temperature of 25 degrees with A/C.
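To make the fan speed / temperature idea concrete, here is a minimal sketch of a piecewise-linear fan curve. It is not how MSI Afterburner is implemented and the curve points are hypothetical; it only shows what "knocking the curve up" means: the fan reaches a high speed at a lower temperature than it would by default.

```python
# Hypothetical (temperature in degrees, fan speed in %) points,
# sorted by temperature. Pick your own values in the fan-control tool.
AGGRESSIVE_CURVE = [(30, 30), (60, 60), (75, 90), (85, 100)]


def fan_speed(temp, curve=AGGRESSIVE_CURVE):
    """Linearly interpolate the fan speed (%) for a temperature."""
    # Below the first point or above the last one, clamp to the end values.
    if temp <= curve[0][0]:
        return float(curve[0][1])
    if temp >= curve[-1][0]:
        return float(curve[-1][1])
    # Otherwise find the segment containing temp and interpolate on it.
    for (t0, s0), (t1, s1) in zip(curve, curve[1:]):
        if t0 <= temp <= t1:
            return s0 + (s1 - s0) * (temp - t0) / (t1 - t0)


for t in (50, 70, 80):
    print(f"{t} degrees -> {fan_speed(t):.0f}% fan")
```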

Best,

Thanks a lot Tomasz, raising the fan speed via MSI Afterburner did the trick. Both cards stay nice and cool now. Octane benchmark went up from 375 to 385.

Best regards

Olaf

Hi Tomasz,

Since it has been a year I wanted to see how those consumer cards are holding up. I haven’t heard of anyone having any issues with cards dying under heavy load but I figured since you have been at the forefront of GPU rendering you might have some good insights.

Hi Joshua,

That’s a late reply, but still relevant. So far we’re close to 3 years after switching to GPU rendering and not a single GPU has died. I’ve mainly been choosing reference models, and from Asus / MSI since those are supposed to have the best warranty (though I haven’t had to use it yet).

As for things dying, there are some… Mostly fans (but not those on the GPUs, for some reason). Two PSU fans died on me, and those had to be replaced as the system got unstable. I had some case fans die as well.

Overall there is some maintenance that needs to be done, but very little compared to a huge CPU render farm.

Best,

Hi Tomasz,

First of all, congratulations on your build and article, it’s very explanatory. The projects you’re using this for are awesome, as well!

I’m looking into assembling a similar render node and I have a few questions:

Did you have to strip the GPUs from their cases or did they fit as they were out of the box?

Did you have other options in terms of rack-mounted cases, besides the Chenbro you chose?

How come you didn’t go for the 1080 TI FE GPUs?

Thank you!

Hi Andrew,

GPUs fit as they are, no need for any adjustments.

Chenbro was the best solution I found in terms of price and fitting 4GPUs, but it might have changed.

I ended up replacing the 980ti with the 1080ti. Keep in mind that this blog post is 15 months old; the 1080ti didn’t exist back then.

Best,

Tomasz

Hi Tomasz,

you wrote:

“I don’t know how I missed your comment. But regarding the PCI speed, from my tests it seems like it doesn’t matter as much with V-Ray RT. It will only affect the time it takes to transfer all the data to GPU, which even with 3.0 x1 wouldn’t be that bad. After initial loading, there is very little data going to and from GPU.”

Does that mean that this rig would be totally fine for rendering? https://www.miningrigs.net/?product=gray-matter-gpu-server-case-v3-0

I don’t see any reason why it wouldn’t work, but best is to test…

Hi Thomas. Love your work, and thanks for sharing so much information with us. We’re looking into buying a GPU cluster, but I’m wondering about network speeds and how much DR rendering, for example, would affect the network. Do you have any experience with that?

Hi Tomasz

With rendering times at around 10 minutes per FHD frame, this would mean that a 3-minute video shot at 24 FPS would take around 10 days to render.

Is this correct ? Any issues with heating if you leave your station to work at full power for 10 days ? Electricity must cost a ton 🙂

We plan on building a rendering station this summer, using the future 2080 chips from Nvidia, maybe 4 x 2080 Ti with 64GB RAM. Any chance we can drop those times down to around 2 minutes/frame?

Thanks,

Hi Dragos,

In general, frames on those nodes render in anywhere from 30 s to 10 minutes. Recently we rendered some 4k shots that took only 1-2 minutes per frame. But there is no rule other than more complex scenes = longer renders. I did calculate that rendering on GPUs, taking into account electricity, render node costs, licences etc., is around 4 times cheaper than on CPU.

As for heating or other issues, there have been none so far. As for the Nvidia 2080 etc… I wouldn’t trust any rumors until there is a solid statement from Nvidia.
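A quick way to sanity-check estimates like this is to multiply the frame count by the time per frame. The sketch below assumes frames are split evenly across nodes and ignores scene loading and failed frames; the function name is made up for illustration.

```python
def total_render_days(video_minutes, fps, minutes_per_frame, nodes=1):
    """Wall-clock days to render a clip, frames split evenly across nodes."""
    frames = video_minutes * 60 * fps
    total_minutes = frames * minutes_per_frame / nodes
    return total_minutes / (60 * 24)


# A 3-minute clip at 24 FPS is 4320 frames.
for mpf in (0.5, 2, 10):
    one = total_render_days(3, 24, mpf)
    two = total_render_days(3, 24, mpf, nodes=2)
    print(f"{mpf:>4} min/frame: {one:.1f} days on one node, {two:.1f} on two")
```

By this arithmetic, 4320 frames at 10 minutes each add up to 30 days on a single node, or 15 days split across two; at 2 minutes per frame it is 6 days on one node.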

Best,

Absolutely, nothing’s certain until Nvidia says so. A couple of days ago they launched the Titan Xp, with around 10% better performance compared to a 1080 Ti, but at almost double the cost.

In this scenario, we’d be looking at investing around 10.000€ to build two nodes, each with 4 X 1080 Ti’s.

I will drop some additional information on this as it might be useful.

The 1080 Ti is a great deal with its 11GB of memory, but the 1070 is still the best choice when it comes to power per dollar. Probably 6×1070 in some kind of open-air case could be the cheapest render node.

I did some crazy estimations a while ago: https://www.dabarti.com/screens/ec027f8d5-d479-4e91-b9a7-198c4b01f4d6294642b5809-093b-4b61-b55a-de8f7b3dc505.png The question was: assuming I need 1 mln frames in a year, what would be the cheapest option?

New Ryzen CPUs are looking pretty good, but they are at least 2 times more expensive than Titan X nodes. They might be a good choice if you need a lot of memory. On the other hand, Nvidia offers great professional Quadro GPUs with 24GB on board, and dual Quadro GP100 cards with NVLink can scale to 32GB of memory.

Anyway. Rendering on GPU is looking pretty good.

Tomasz, thank you so much for posting this! I’m building my own rig right now and I cannot decide between the 5820K and the 5930K. I will use dual GTX 1070 FE for GPU rendering with V-Ray RT (I don’t need much more at the moment). What is your stance on having a CPU with 28 vs 40 PCIe lanes? Is there any real difference in rendering performance between 16x/16x and 16x/8x PCIe lane configurations? The price tag on the 5930K is substantially higher and I cannot decide whether it is worth the additional cost.

Any advice on that matter will be highly appreciated. Especially from someone like you.

Greetings from a fellow Pole.

Hi Sebastian,

I don’t know how I missed your comment. But regarding the PCI speed, from my tests it seems like it doesn’t matter as much with V-Ray RT. It will only affect the time it takes to transfer all the data to GPU, which even with 3.0 x1 wouldn’t be that bad. After initial loading, there is very little data going to and from GPU.
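To put rough numbers on the transfer time, here is a back-of-the-envelope sketch. It uses the theoretical PCIe 3.0 rate of 8 GT/s per lane with 128b/130b encoding (about 0.985 GB/s per lane); real-world throughput is lower, and the 6 GB scene size is a hypothetical example, not a measurement from my tests.

```python
# Theoretical PCIe 3.0 throughput per lane, per direction:
# 8 GT/s with 128b/130b encoding, converted from bits to bytes.
PCIE3_BYTES_PER_SEC_PER_LANE = 8e9 * (128 / 130) / 8  # about 0.985 GB/s


def upload_seconds(scene_gb, lanes):
    """Best-case time to copy scene_gb gigabytes over a PCIe 3.0 link."""
    scene_bytes = scene_gb * 1e9
    return scene_bytes / (PCIE3_BYTES_PER_SEC_PER_LANE * lanes)


SCENE_GB = 6  # hypothetical scene that fills a 6GB card

for lanes in (1, 8, 16):
    print(f"x{lanes:<2}: {upload_seconds(SCENE_GB, lanes):.2f} s")
```

Even in the x1 case the upload is on the order of seconds, which is small next to render times measured in minutes per frame.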

Best,

Thank You very much for your response. I went with 5930K anyway (I got it for a great price-only 50$ more expensive than 5820K which was a bargain if you ask me). Double GTX 1070 FE should do the trick for now with plenty of headroom for future improvement, including additional GPUs. Anyway-thanks again. I admire your work. Keep it up!

Hello, I have recently bought 4x EVGA GTX1080 FTW DT GAMING ACX3.0 8GB cards for my new rendering workstation. However, the Nvidia guy kept telling us that 4-way SLI won’t work and that the GTX1080 only supports 2-way. I used to have a GTX980ti SLI for rendering. Will it give a huge performance boost, or will it just work like one card, as the Nvidia guy said?

Thanks

You don’t have to connect cards with SLI for GPU rendering. RT GPU will utilize them as long as you can see them in your system and they have drivers installed.

The Nvidia guy said GeForce is never recommended for 3ds Max, only Quadro. Did you try out both of those cards?

GTX cards are perfect for GPU rendering. Check my guide https://dabarti.com/vfx/short-guide-to-gpu-rendering-with-v-ray-rt/ .

Nvidia guy might be referring to viewport and 3ds max stability as Quadros are supposed to be better at that but I personally never had issues with GTXs.

Best,

Hi, when you say: 4 x Nvidia GTX 980Ti 6GB at around 2840 Eur with Asus motherboard

What ASUS motherboard?

It was the Asus Rampage V Extreme Intel X99 LGA2011-3 BOX. Most likely it is a bit overkill. I’m thinking about revisiting this with the most affordable build possible… Something with an open-air case and 6 GPUs could be interesting.

Hello Tomasz, first of all thank you for your article. Secondly are you using GTX 980s etc.. as opposed to the quadro cards because they’re a more cost effective solution?

Yes. That’s the main reason. They are more cost effective, though there are claims that Quadros are more stable and reliable.

Personally I have never had any issues with GTX cards and I can recommend them for rendering.

Tomasz, thanks a lot for this great info and the script you made for V-Ray RT. I tried GPU rendering a few years ago and I didn’t like it. However, after reading your post I gave it another go and… it’s great! Just in time, as I was planning to upgrade my PC.

I am wondering if the core i7 6700k processor will be able to run 4 GPU’s and a PCIe SSD, as I have noticed that it has only 16 PCIe lanes. Where it gets a bit confusing for me is that some of the motherboards offer 4 way SLI.

I thought I would ask you as you have more knowledge in this department.

Vas, GPUs for rendering are not supposed to be connected through SLI. Rendering will work just fine without it, and SLI may even slow rendering down. When it comes to PCIe lanes and PCIe speed, as far as I know they only affect the time needed to transfer all geometry and textures into GPU memory, and the difference would be so small that overall performance will be pretty much identical.

Best,

Tomasz

Thanks Tomasz, I have done some research and those motherboards have PLX chips to split the PCI lanes to the GPU’s. As much as I like the 6700k CPU I will probably go for a core I7 6850k just to avoid any issues.

One other question regarding V-Ray RT. In production rendering mode I noticed a high peak in CPU usage for a few seconds while the light cache is calculated, and then the GPU kicks in once this is complete. Is this normal? Is this not supposed to be calculated by the GPU? This is not the case in ActiveShade mode, where Light Cache is not used.

Light Cache is always calculated on CPU at the moment.

Hi guys,

Thank you for the quality information on the site, and congratulations on your work!

I am thinking about building a system with an i7 4790k, 32gb ram and 2 x gtx690 4gb for rendering. Do you think it is a good system for rendering architectural interior and exterior scenes (houses, offices, etc.) with V-Ray RT?

Hi Daniel,

I wouldn’t recommend 690 for GPU rendering. Actually I think the best option now is the 1070 8GB, way better performance, double the memory, lower power needs.

Check out my guide to GPU rendering, as I wrote quite a bit about hardware and best current solutions – https://dabarti.com/vfx/short-guide-to-gpu-rendering-with-v-ray-rt/

Best,

Tomasz

Have you used Red Shift on this setup yet? If so what are your render times on images like the ones you’ve shown?

I’m looking to build a render farm soon.

Thanks

paul

Hi Paul,

I tried Red Shift, first on a 970 and then later on a 1070, to compare it against other GPU engines, but I ended up sticking with V-Ray RT.

Render times for 1080p frames are usually in range of 2-6 minutes for our shots. It can go up to 10-15min for heavy scenes.

Best,

Hello, I want to build a 4x GTX Titan workstation for GPU rendering. Because I’m going to render everything on the GPU, how much CPU would you recommend for the new workstation? Core i7 or Xeon? Other applications that are not rendering run very smoothly with a normal CPU.

Right now i have this setup:

CPU: 2.66 GHz Dual Quad Core Xeon X5550 (8core / 16 threads)

RAM:32GB 1066 MHz DDR3

GPU: Nvidia GeForce GTX 770 3GB

OS: Win 8

5 years old.

It’s OK for work; it just needs more speed for rendering.

Hi, have you ever rendered something of this scale with this setup? https://www.flickr.com/photos/phamduongvu/23293917054/in/dateposted-public/

Love to study more

Looking forward to hearing more details about using RT in production. Keep up the great work. Currently debating whether to build dual Xeon or quad GPU setup and seeking info!

Hi Tomasz

Is it possible to use this machine as a DR node from within Max or Maya with RT and the GPUs?

Hi Tomasz, is it possible to use this as a DR node from another workstation that doesn’t have an RT-compatible GPU?

I was thinking of something like this in order to speed up lookdev and not having to send out frames individually to each node

Yes, it’s possible. You just have to switch off “use local host” in the DR settings.

I have a Z97 Pro AC WiFi board, 32 GB DDR3 RAM, dual GTX 970 and a 4790K CPU. If I upgrade to dual GTX 980 Ti, do you think I could render in 15-20 min?

Dual GTX 980Ti would definitely be way faster than the 4790K. When it comes to comparing GPUs, you can test your render times on the 970s that you have and use that as a baseline. The 980Ti would be around 60% faster. In the near future I will post a few more details about using RT in production.

I do architectural design, and on my PC a render takes around 30-40 min. I’m trying to understand whether I should go for a workstation PC or invest in graphics cards 🙂

Basically, what I’m asking is which way is faster for rendering: a dual-CPU motherboard with a single GPU, or a single CPU + 4 GPUs?

Can you please tell me how long it takes to render something like a professional print out board?

What exactly do you mean by “professional print out board”? In general we render animations at 1080p. If you check our vimeo page, most of the latest clips were rendered on this farm, with times per frame usually in the range of 1-10 minutes depending on the complexity. Sometimes it does go up to 20-30 minutes, but that is quite rare.

Awesome! Thanks for the answer.

Hello!! Nice setup, I’m trying to build this atm. I have a few Titans, and another rig that is going with 980ti.

How do you deal with the temps with all the cards stacked like that?

What is the highest that you have seen?

Temperatures are usually in the range of 78-84. Fan speed is managed automatically by the GPU (default) and sits at 60-70%, so there is still some room in case things get too hot. It has been going almost non-stop for 3 months so far, so I would say it’s very stable.

Just checked. Both nodes have up time at 22 days at the moment. Since last electricity malfunction 🙂